Pragmatic verification and validation of industrial executable SysML models

- Journal: Systems Engineering

- Year: 2023

Summary

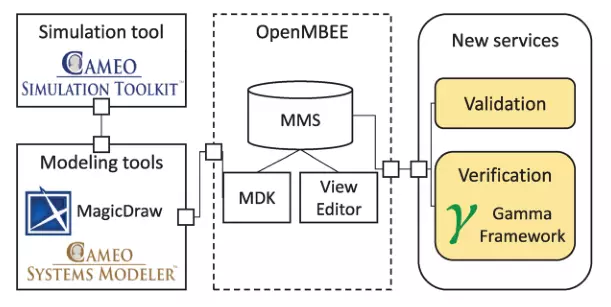

We proposed (i) a subset of the SysML language that can be validated and verified using a pragmatic approach, and (ii) a cloud-based V&V framework for this subset, lifting verification to an industrial scale. We demonstrated the feasibility of our approach on an industrial-scale model from the aerospace domain and summarized the lessons learned while transitioning formal verification tools to an industrial context.

Assessing the specification of modelling language semantics: a study on UML PSSM

- Journal: Software Quality Journal

- Year: 2023

Summary

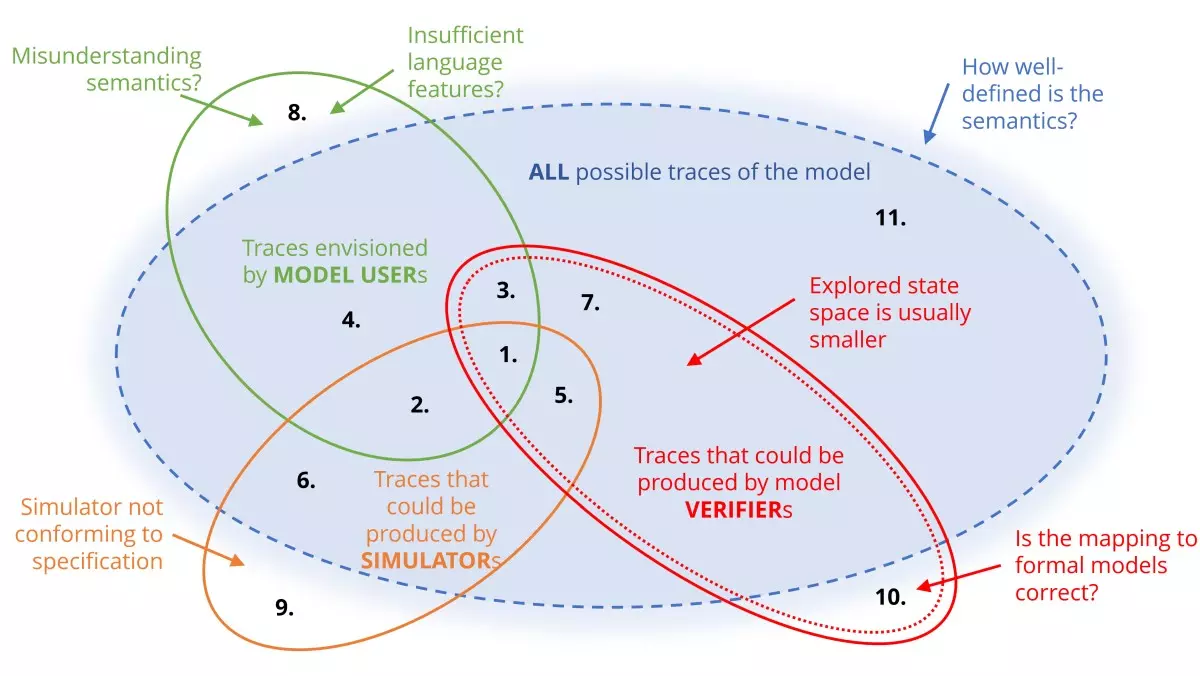

We investigated the challenges and typical issues in assessing specifications of behavioural modelling language semantics. Our key insight is that the various stakeholders' understandings of a language's semantics are often misaligned, and the semantics defined in the various artefacts (simulators, test suites) are inconsistent.

The many meanings of UML Sequence Diagrams: a survey

- Journal: Softw Syst Model

- Year: 2011

Summary

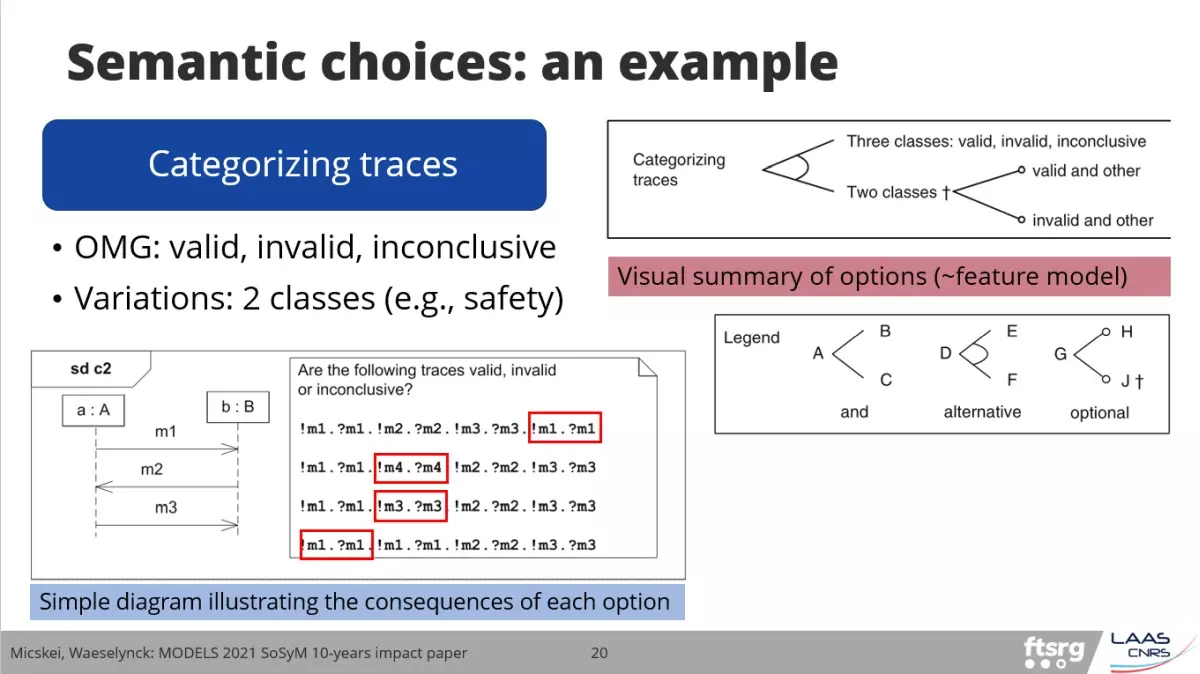

We collected and categorized the semantic choices in UML 2 Sequence Diagrams, surveyed the formal semantics proposed for the language, and presented how these approaches handle the various semantic choices. We hope the survey will also help other UML practitioners who are searching for a suitable semantics or want to define a new semantics for their own problem domain.

Classifying generated white-box tests: an exploratory study

- Journal: Software Quality Journal

- Year: 2019

Summary

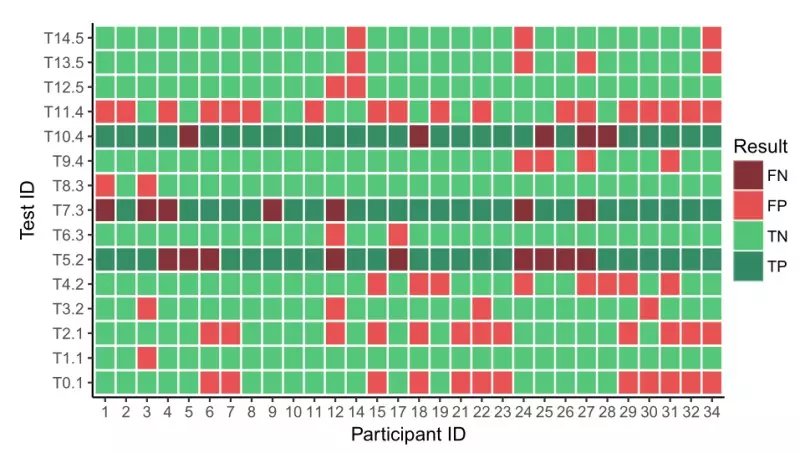

We designed an exploratory study with 106 participants to provide insights into how well developers classify whether an automatically generated test captures expected or unexpected behavior. The results showed that participants tended to misclassify tests. We recommended a conceptual framework to describe the classification task and suggested taking this problem into account when using or evaluating white-box test generators.

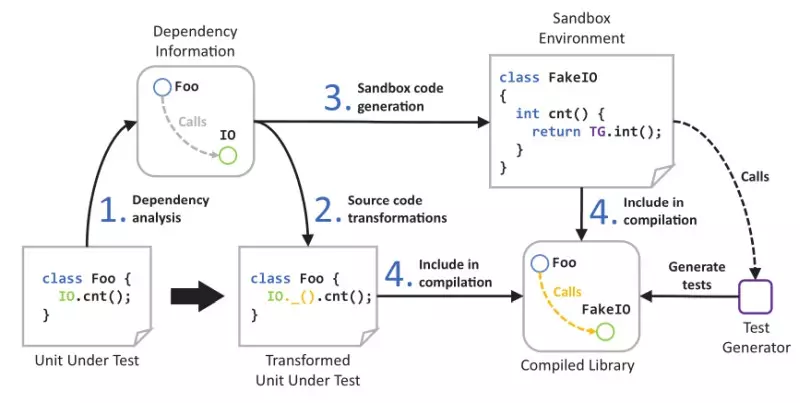

Automated isolation for white-box test generation

- Journal: Inform Software Tech

- Year: 2020

Summary

We presented an automated approach addressing the external dependency challenge for white-box test generation. The technique isolates the test generation and execution by transforming the code under test and creating a parameterized sandbox with generated mocks. We implemented the approach in a ready-to-use tool using Microsoft Pex as a test generator, and evaluated it on 10 open-source projects.

Evaluating code-based test input generator tools

- Journal: Softw Test Verif Reliab.

- Year: 2017

Summary

To evaluate test input generators, we collected a set of programming language concepts that these tools should handle and mapped these core concepts and challenging features to 363 code snippets. We created an automated framework called SETTE to execute and evaluate these snippets. The results highlight the strengths and weaknesses of each tool and approach and identify the code constructs that most tools find difficult to handle.