Robustness testing

Definition: Robustness is defined as the degree to which a system operates correctly in the presence of exceptional inputs or stressful environmental conditions. [IEEE Std 24765:2010]

Goal: The goal of robustness testing is to develop test cases and test environments where a system's robustness can be assessed.

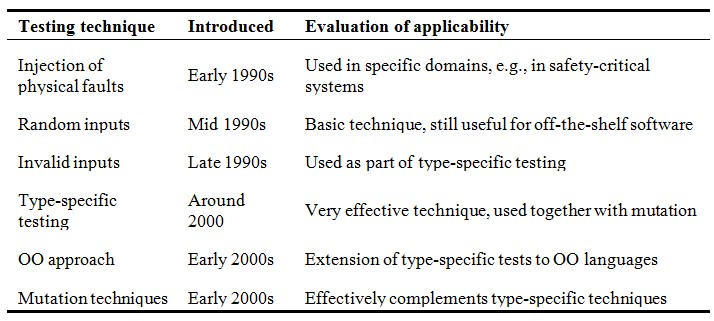

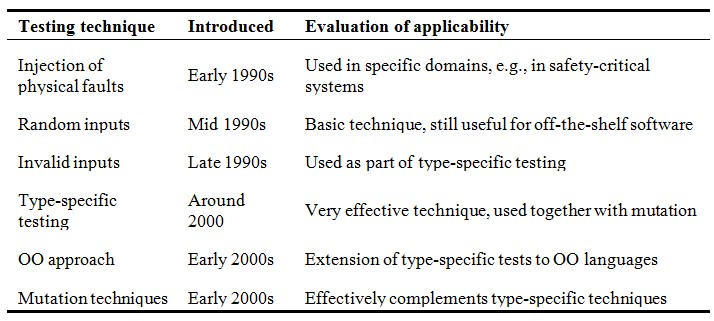

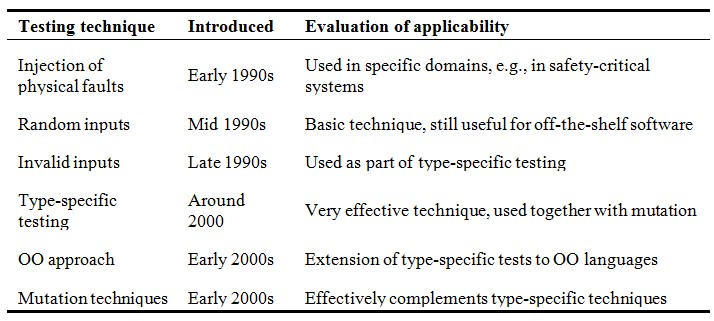

Robustness testing approaches

Many research projects have worked on this topic; the two major approaches are:

I. Interface robustness testing: bombarding the public interface of the application/system/API with valid and exceptional inputs. In most cases the success criterion is "if it does not crash or hang, then it is robust", hence no oracle is needed for the testing. Examples:

- Fuzz: Fuzz used a simple method (randomly generated strings) to test the robustness of Unix console applications. The authors repeated their original experiment (1990) in 1995 and also applied the method to X Window applications. The results were distressing: originally, approximately 40% of the applications tested could be crashed with this method, and many of the reported robustness errors remained even after five years. In 2000 they conducted a third experiment with Windows 2000 applications. The method was similar: randomly generated mouse and keyboard events were supplied to the programs. The source code of the testing tools can be downloaded from Fuzz's homepage.

- Ballista: in Ballista the robustness of POSIX API implementations was tested. The authors conducted a great number of experiments and compared 15 Unix versions. Later the test suite was also implemented for Windows systems. Part of the POSIX test suite can be downloaded from their website. Many publications about Ballista have appeared over the years; a good introduction is the brochure and the "Software Robustness Evaluation" slides. Ballista introduced quite a few good ideas and techniques, and its many experiments are well documented, so it is worth a look.

- JCrasher: JCrasher is a tool that generates robustness tests from Java bytecode in the form of JUnit tests. It implements novel approaches in a polished tool, which can also be downloaded as an Eclipse plug-in.

- PROTOS (Security Testing of Protocol Implementations):

The PROTOS project analyzes the robustness and security aspects of protocol implementations. In addition to papers, the project published a test suite for WAP. The project was later split into a research project (PROTOS Genome) and a commercial tool called Codenomicon DEFENSICS.
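
The "does not crash or hang" criterion used by these tools can be illustrated with a minimal Fuzz-style sketch. This is not the code of the original Fuzz tools: the function names (`random_input`, `run_once`, `fuzz`), the timeout value, and the example target are all assumptions made for illustration. It also assumes a POSIX system, where a negative return code means the process was killed by a signal.

```python
import random
import subprocess
import sys


def random_input(rng, max_len=1024):
    """Return a random byte string, including NUL and non-printable bytes."""
    length = rng.randint(0, max_len)
    return bytes(rng.randrange(256) for _ in range(length))


def run_once(command, data, timeout_s=5.0):
    """Feed `data` to the program's stdin and classify the outcome."""
    try:
        proc = subprocess.run(
            command,
            input=data,
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        # No answer within the time limit: treated as a hang.
        return "hang"
    # Assumption: POSIX semantics, negative code = terminated by a signal.
    if proc.returncode < 0:
        return "crash"
    # Any normal exit counts as robust, even with a non-zero error code.
    return "ok"


def fuzz(command, runs=100, seed=0):
    """Run the program on `runs` random inputs and collect the failures."""
    rng = random.Random(seed)  # fixed seed so failures can be reproduced
    failures = []
    for _ in range(runs):
        data = random_input(rng)
        outcome = run_once(command, data)
        if outcome != "ok":
            failures.append((outcome, data))
    return failures


if __name__ == "__main__":
    # Hypothetical target: a tiny program that just reads its standard input.
    target = [sys.executable, "-c", "import sys; sys.stdin.buffer.read()"]
    print(fuzz(target, runs=10))
```

Because the verdict depends only on the exit status and the timeout, the sketch needs no description of the program's expected output, which is what "no oracle is needed" means in practice.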

More information

- Z. Micskei, H. Madeira, A. Avritzer, I. Majzik, M. Vieira, N. Antunes: Robustness Testing Techniques and Tools. In: K. Wolter et al. (eds.). Resilience Assessment and Evaluation of Computing Systems, pp. 323-339, Springer-Verlag, 2012. DOI: 10.1007/978-3-642-29032-9_16

- Philip Koopman, Kobey Devale, John Devale. Chapter 11. Interface Robustness Testing: Experience and Lessons Learned from the Ballista Project. In: Karama Kanoun, Lisa Spainhower (eds.). Dependability Benchmarking for Computer Systems. ISBN: 978-0-470-23055-8. August 2008, (Online draft)

II. Dependability benchmarking: the aim is to develop a public benchmark specification that focuses on evaluating the dependability of the system. It is a much broader field than robustness, as it also covers the other attributes of dependability, such as availability and maintainability. The common method is to create a workload that resembles the normal operation of the system under benchmark, then define a faultload that contains typical faults (hardware, software, operator, etc.) and the exact time period when they should be inserted. The specification also states which dependability measures should be collected.
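
The three parts of such a specification (workload, faultload, measures) can be sketched as a small data structure with a toy runner. This does not reproduce any of the published benchmarks: `Fault`, `BenchmarkSpec`, `ToySystem`, and `run_benchmark` are hypothetical names, time is modeled as discrete ticks, and the per-fault recovery `duration` is a simulation assumption rather than part of a real faultload.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Fault:
    kind: str        # e.g. "hardware", "software", "operator"
    inject_at: int   # tick at which the fault is inserted
    duration: int    # assumption: ticks until the simulated system recovers


@dataclass
class BenchmarkSpec:
    workload_ticks: int       # workload: one request per tick
    faultload: List[Fault]    # which faults to insert, and when
    measures: List[str] = field(default_factory=lambda: ["availability"])


class ToySystem:
    """Stand-in for the system under benchmark; purely simulated."""

    def __init__(self):
        self.down_until = 0

    def inject(self, fault: Fault, now: int) -> None:
        # A fault makes the system unavailable until it recovers.
        self.down_until = max(self.down_until, now + fault.duration)

    def handle_request(self, now: int) -> bool:
        return now >= self.down_until


def run_benchmark(spec: BenchmarkSpec, system: ToySystem) -> Dict[str, float]:
    """Replay the workload, insert the faults on schedule, collect measures."""
    faults_by_tick: Dict[int, List[Fault]] = {}
    for fault in spec.faultload:
        faults_by_tick.setdefault(fault.inject_at, []).append(fault)

    served = 0
    for tick in range(spec.workload_ticks):
        for fault in faults_by_tick.get(tick, []):
            system.inject(fault, tick)
        if system.handle_request(tick):
            served += 1

    total = spec.workload_ticks
    collected = {
        "requests": total,
        "failed_requests": total - served,
        "availability": served / total if total else 0.0,
    }
    # Report only the measures that the specification asks for.
    return {name: collected[name] for name in spec.measures}
```

A real benchmark would replace `ToySystem` with the actual system under benchmark and a fault injector, but the separation of workload, faultload, and collected measures stays the same.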

- DBench: the goal of the EU project DBench was to produce guidelines for developing dependability benchmarks. Along with the general guidelines and background research, they also developed concrete benchmarks:

- OLTP benchmarks: 4 configurations of Oracle DBMS compared in the paper "Benchmarking the Dependability of Different OLTP Systems" (DOI: 10.1109/DSN.2003.1209940)

- Webserver benchmarks: compared the Abyss and Apache web servers; the faultload simulated typical programmer errors ("Dependability Benchmarking of Web-Servers", DOI: 10.1007/978-3-540-30138-7_25)

- OS benchmarks: benchmarked 6 Windows and 4 Linux versions using the PostMark file benchmark as a load ("Benchmarking the dependability of Windows and Linux using PostMark™ workloads", DOI: 10.1109/ISSRE.2005.13)

- IBM Autonomic Computing Benchmark: similar to DBench-OLTP, but uses SPECjAppServer2004 as a workload and focuses on the resiliency of the system to various disturbances.

More information

- Karama Kanoun and Lisa Spainhower, eds. Dependability Benchmarking for Computer Systems. Wiley-IEEE Computer Society Press, 2008. ISBN: 9780470230558. DOI: 10.1002/9780470370506

- Ali Shahrokni, Robert Feldt. A systematic review of software robustness, Information and Software Technology, Volume 55, Issue 1, January 2013, pp. 1-17, DOI: 10.1016/j.infsof.2012.06.002.

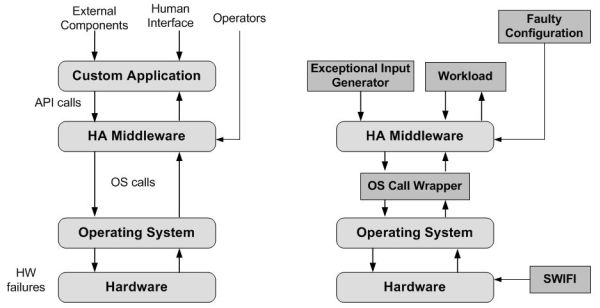

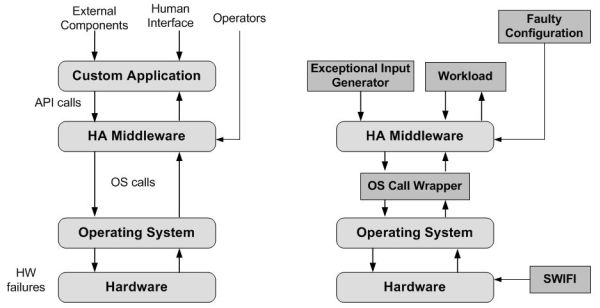

Our robustness testing experiments of HA middleware

Together with Nokia Research Center we worked on the robustness testing of high-availability (HA) middleware systems. The general idea is illustrated in the following figure: the left side shows the typical fault sources of an HA middleware, and the right side shows the means to test against these fault sources.

Publications:

Test suite and results:

Last modified: 2013. 10. 02.